Ampere scales CPU to 256 cores and partners with Qualcomm on cloud AI

Server CPU designer Ampere Computing announced its AmpereOne chip family will grow to 256 cores by next year. The company will also work with Qualcomm on cloud AI accelerators.

The new Ampere central processing unit (CPU) will provide 40% more performance than any CPU currently on the market, chief product officer Jeff Wittich said in an interview with VentureBeat.

Santa Clara, California-based Ampere will work with Qualcomm Technologies to develop a joint AI inferencing solution that combines Ampere CPUs with Qualcomm Technologies’ high-performance, low-power Qualcomm Cloud AI 100 inference solutions.

Ampere CEO Renee James said the rising power requirements and energy challenges of AI are bringing Ampere’s silicon design approach, which centers on performance and efficiency, into focus more than ever.

“We started down this path six years ago because it is clear it is the right path,” James said. “Low power used to be synonymous with low performance. Ampere has proven that isn’t true. We have pioneered the efficiency frontier of computing and delivered performance beyond legacy CPUs in an efficient computing envelope.”

Data center energy efficiency

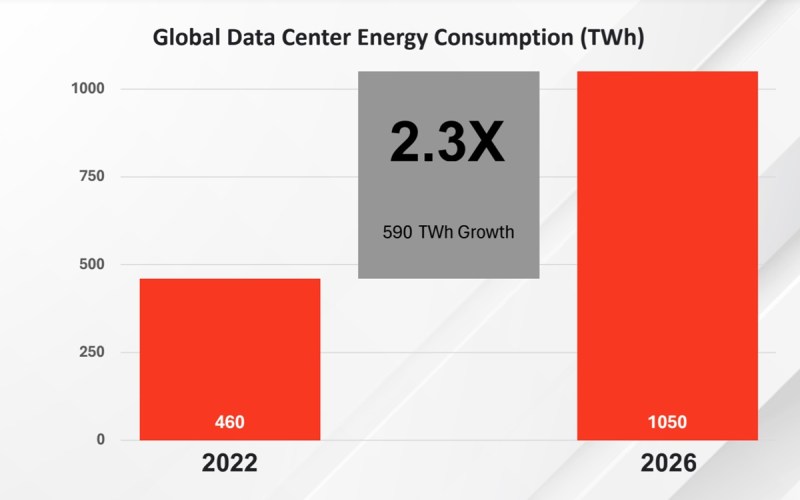

James said the rapid advance of AI has created a growing problem for the industry: energy.

“The current path is unsustainable. We believe that the future datacenter infrastructure has to consider how we retrofit existing air-cooled environments with upgraded compute, as well as build environmentally sustainable new datacenters that fit the available power on the grid. That is what we enable at Ampere,” James said.

Wittich echoed James’ comments.

“Why did we build a new CPU? It was to solve the growing power problem in data centers — the fact that data centers are consuming more and more power. It’s been a problem. But it’s even a bigger problem today than it was a couple of years ago because now we have AI as a catalyst to go and consume even more power,” Wittich said. “It’s critical that we create solutions that are more efficient. We’re doing this in general purpose compute. We’re doing it in AI as well. It’s really imperative that we build broad horizontal solutions that involve a lot of ecosystem partners so that these are solutions that are broadly available and solve the big problems, not just solve power consumption per se.”

Wittich shared Ampere’s vision for what the company calls “AI Compute,” which spans everything from traditional cloud-native workloads to AI.

“Our Ampere CPUs can run a range of workloads – from the most popular Cloud Native applications to AI. This includes AI integrated with traditional Cloud Native applications, such as data processing, web serving, media delivery, and more,” Wittich said.

A big roadmap

James and Wittich also highlighted the company’s upcoming AmpereOne platform, announcing a 12-channel, 256-core CPU that is ready to go on TSMC’s N3 manufacturing process node. Ampere designs chips and works with external foundries to manufacture them. The previous chip, announced in May 2023, had 192 cores. It went into production last year and is now on the market.

Ampere is working with Qualcomm Technologies to scale out a joint solution featuring Ampere CPUs and the Qualcomm Cloud AI 100 Ultra. This solution will tackle LLM inferencing on the industry’s largest generative AI models.

Wittich said Ampere makes highly efficient CPUs, while Qualcomm has highly efficient, high-performance AI accelerators. Its Cloud AI 100 Ultra cards perform well across AI workloads, he said, especially on very large models with hundreds of billions of parameters.

He said that models of that size may call for a specialized solution such as an accelerator. Ampere is therefore working with Qualcomm to optimize a joint solution, a Supermicro server, which will be validated out of the box and easy for customers to adopt, he said.

“It’s an innovative solution for people in the AI inferencing space,” Wittich said. “We do some pretty cool work with Qualcomm.”

Ampere is expanding its 12-channel platform with the upcoming 256-core AmpereOne CPU. The chip will use the same air-cooled thermal solutions as the existing 192-core AmpereOne CPU and deliver more than 40% more performance than any CPU on the market today, without exotic platform designs. The company’s 192-core, 12-channel memory platform is still expected later this year, up from eight memory channels previously.

Ampere also said that Meta’s Llama 3 is now running on Ampere CPUs at Oracle Cloud. Performance data shows that running Llama 3 on the 128-core Ampere Altra CPU with no GPU delivers the same performance as an Nvidia A10 GPU paired with an x86 CPU, while using a third of the power.
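As a rough illustration of what that claim implies, assume “the same performance” means equal throughput $P$ for both setups and “a third of the power” means the CPU-only system draws $W/3$ where the GPU system draws $W$ (both symbols are placeholders, not figures Ampere disclosed). The performance-per-watt advantage then works out to about 3x:

```latex
\frac{\text{perf/W}_{\text{Altra}}}{\text{perf/W}_{\text{A10 + x86}}}
  = \frac{P / (W/3)}{P / W}
  = \frac{3P/W}{P/W}
  = 3
```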

Ampere announced the formation of a UCIe working group as part of the AI Platform Alliance, which launched in October. As part of this effort, the company said it would build on the flexibility of its CPUs by using the open interface technology to incorporate other customers’ IP into future CPUs.

Competition is good

The executives provided new details on AmpereOne performance and on original equipment manufacturer (OEM) and original design manufacturer (ODM) platforms. AmpereOne continues Ampere’s performance-per-watt leadership, outpacing AMD’s Genoa by 50% and Bergamo by 15%. For data centers looking to refresh and consolidate old infrastructure to reclaim space, budget and power, AmpereOne delivers up to 34% more performance per rack.

The company also disclosed that new AmpereOne OEM and ODM platforms would be shipping within a few months.

Ampere also announced a joint solution with NETINT that uses NETINT’s Quadra T1U video processing chips and Ampere CPUs to simultaneously transcode 360 live channels, along with real-time subtitling for 40 streams across many languages using OpenAI’s Whisper model.

In addition to existing features such as Memory Tagging, QoS Enforcement and Mesh Congestion Management, the company revealed a new FlexSKU feature, which lets customers use the same SKU for both scale-out and scale-up use cases.

Ampere has been working with Oracle to run huge models in the AI cloud, cutting costs by 28% and consuming just a third of the power of rival Nvidia solutions, Wittich said.

“Oracle saves a lot of power. And this gives them more capacity to deploy more AI compute by running on the CPU,” he said. “That’s our AI story and how it all fits together.”

The savings let customers run with 15% fewer servers, 33% fewer racks and 35% less power, he said.