Databricks makes building real-time ML apps easier with new service

Databricks today launched serverless real-time inference to make building and deploying real-time ML apps easier for enterprises. …

San Francisco-headquartered Databricks, which provides a data lakehouse platform for storing and mobilizing disparate data, today debuted serverless real-time inference capabilities. The company says the move will make deploying and running real-time machine learning (ML) applications easier for hard-pressed enterprises.

Today, real-time ML is the key to product success. Enterprises are deploying it across a range of application use cases — from recommendations to chat personalization — to take immediate actions based on streaming data and improve revenues. However, when it comes to full-life-cycle support for AI application systems, things can get tricky.

Teams have to host their ML models on the cloud or on-premises, and then make their functions accessible via API to make them work within the application system. The process is often dubbed “model serving.” It demands creation of a fast and scalable infrastructure that supports not only the main serving need, but also feature lookups, monitoring, automated deployment and model retraining. This results in teams integrating disparate tools, which increases operational complexity and creates maintenance overhead.
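To make "model serving" concrete, here is a minimal sketch of the idea using only Python's standard library: a function standing in for a trained model, wrapped in an HTTP handler so an application can request predictions over an API. The `predict` weights, the `inputs` payload shape and the port are hypothetical choices for illustration, not part of any Databricks product. A production setup would still need the feature lookups, monitoring, deployment automation and retraining described above.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Toy stand-in for a trained model: a fixed linear score."""
    weights = [0.5, -0.25, 1.0]  # hypothetical weights, for illustration only
    return sum(w * x for w, x in zip(weights, features))


def score_request(body: bytes):
    """Turn a JSON request body into (HTTP status, JSON response bytes)."""
    try:
        rows = json.loads(body)["inputs"]
        predictions = [predict(row) for row in rows]
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing "inputs" key or non-numeric rows land here.
        error = {"error": "expected a JSON object with an 'inputs' list of rows"}
        return 400, json.dumps(error).encode()
    return 200, json.dumps({"predictions": predictions}).encode()


class ModelHandler(BaseHTTPRequestHandler):
    """Exposes score_request over HTTP POST."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, response = score_request(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8080):
    """Block forever, answering prediction requests on localhost."""
    HTTPServer(("127.0.0.1", port), ModelHandler).serve_forever()
```

Calling `serve()` starts the toy endpoint. Everything beyond this single process, such as scaling, failover and version management, is the infrastructure work the article describes.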

In fact, most data scientists handling this task end up spending a large chunk of their time and resources just on stitching together and maintaining data, ML and serving infrastructure in the ML life cycle.

Model serving with serverless real-time inference

To address this particular gap for its customers, Databricks has made model serving with serverless real-time inference generally available (GA). It is an important step for a company that has led the development of cloud-based Spark data processing methods.

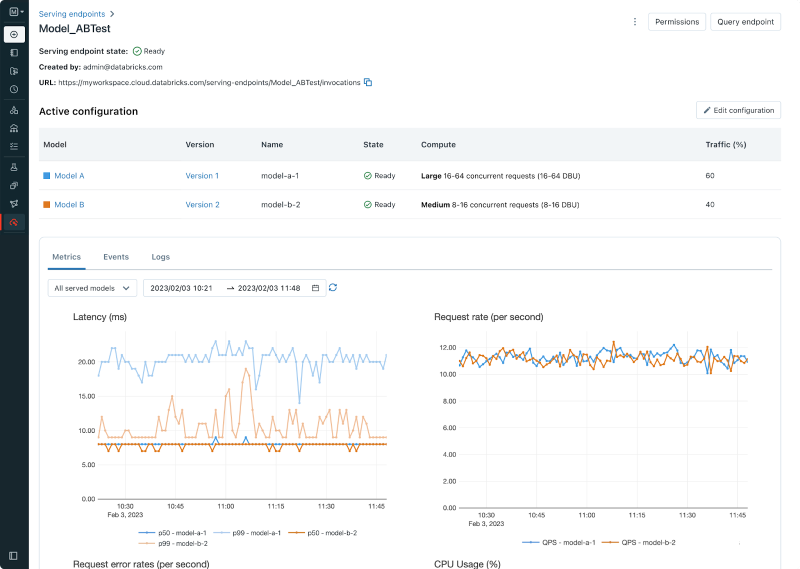

As Databricks explains, the new offering is a fully managed, production-grade service that exposes MLflow machine learning models as scalable REST API endpoints. It does all the heavy lifting associated with the process, from configuring the infrastructure to managing instances, maintaining version compatibility and applying patches. The service dynamically grows and shrinks resources to match demand, which Databricks says ensures cost-effectiveness and scalability, all while providing high availability and low latency.
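From the application side, a model exposed as a REST endpoint is called with an ordinary HTTP request. The sketch below only builds such a request and does not send it. The URL path (`/serving-endpoints/<name>/invocations`), the bearer-token header and the `dataframe_split` payload shape are assumptions modeled on MLflow's scoring conventions and should be checked against the current Databricks documentation. The helper name `build_scoring_request` and all argument values are hypothetical.

```python
import json
import urllib.request


def build_scoring_request(base_url, endpoint_name, token, columns, rows):
    """Build (but do not send) a POST request for a model-serving endpoint.

    Assumed, not confirmed by the article: the URL layout, bearer-token
    authentication and the "dataframe_split" payload format.
    """
    url = f"{base_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    payload = {"dataframe_split": {"columns": columns, "data": rows}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send the request. Because the endpoint is a plain REST API, the calling application needs no ML libraries of its own.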

With this offering, Databricks notes, enterprises can reduce infrastructure overhead and accelerate their teams’ time to production. Plus, its deep integration with various data lakehouse services, like feature store, ensures automatic lineage, governance and monitoring across the data, feature and model life cycle. This means teams can manage the entire ML process, from data ingestion and training to deployment and monitoring, on a single platform, creating a consistent view across the ML life cycle that minimizes errors and speeds up debugging.

The time and resources saved with model serving can instead be used to build better-quality models faster, Databricks noted. Chris Sawtelle, engineering manager at Barracuda Networks, and Gyuhyeon Sim, CEO at Letsur AI, cited similar advantages.

Sawtelle said Databricks’ model serving enables the Barracuda team to manage, deploy and monitor ML models in the same pipeline. This allows ML engineers to focus solely on producing the most effective models without spending time on model availability and operations.

Meanwhile, Sim noted the fast autoscaling of the service keeps costs low while still allowing them to scale as traffic demand increases. “Our team is now spending more time building models solving customer problems rather than debugging infrastructure-related issues,” he added.

The general availability of the service comes as the latest move from Databricks to provide everything enterprises need to quickly and easily build models using the data stored in its lakehouse. The company has also launched industry-specific versions of its platform to better serve clients in sectors like healthcare and compete strongly against players like Snowflake and MongoDB.

Databricks has raised a hefty $3.5 billion over nine funding rounds. Its customer base includes giants like AT&T, Columbia, Nasdaq, Grammarly, Rivian and Adobe.
